Before explaining how testing is done, let us place it in a wider context. At about the time that the IS community discovered the high cost of error correction, the United States Department of Defense devised a set of processes to control the work of contractors on large, complex weapons systems procurement projects. Because of the increasing software content of modern weapons systems, these processes were naturally adapted to the world of software development. They also put testing in proper perspective as one means among many to ensure software quality.

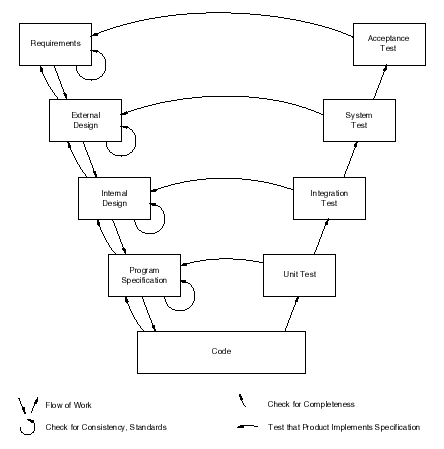

Because of the shape of the graphical representation of these processes, the name V model was coined. An example of a V model that applies to business information systems development is depicted in Figure 3.7.

The left-hand side of the V depicts activities of decomposition: going from a general objective to more and more detailed descriptions of simpler and simpler artifacts (such as program code in a business system). The right-hand side depicts integration: the assembly of the individual pieces of code created at the bottom angle into larger and larger components. As each assembly or subassembly is completed, it is tested, not only to ensure that it was put together correctly, but also that it was designed correctly (at the corresponding level on the left-hand side of the V).

The final characteristic to be noted is that each phase corresponds to a hand-off from one team to another (or from a contractor to a subcontractor, in the original Department of Defense version of the V model). In the case of systems development, what is handed off is a set of deliverables: a requirements or design document, a piece of code, or a test model. The hand-off is only allowed to take place after an inspection (verification and validation) of the deliverables proves that they meet a set of predefined exit criteria. If the inspection is not satisfactory, the corresponding deliverables are scrapped and reworked.

Let us define verification and validation. Verification checks that a deliverable is correctly derived from the inputs of the corresponding activity and is internally consistent. In addition, it checks that both the output and the process conform to standards. Verification is most commonly accomplished through an inspection. Inspections involve a number of reviewers, each with specific responsibilities for verifying aspects of the deliverable, such as functional completeness, adherence to standards, and correct use of the technology infrastructure.

Validation checks that the deliverables satisfy the requirements specified at the beginning, and that the business case continues to be met; in other words, validation ensures that the work product is within scope, contributes to the intended benefits, and does not have undesired side effects. Validation is most commonly accomplished through inspections, simulation, and prototyping. An effective technique of validation is the use of traceability matrices, where each component of the system is cross-referenced to the requirements that it fulfills. The traceability matrix allows you to pinpoint any requirement that has not been fulfilled, as well as those requirements that need several components to be implemented (which is likely to cause problems in maintenance).
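The traceability matrix described above can be sketched in a few lines of Python. This is only an illustration: the requirement IDs, the component names, and the threshold for "several components" are all hypothetical and not taken from any particular project.

```python
# Hypothetical requirement IDs and component names, for illustration only.
requirements = ["R1", "R2", "R3", "R4"]

# Each component lists the requirements it helps to fulfill.
component_to_requirements = {
    "order_entry": ["R1", "R2"],
    "invoicing": ["R2"],
    "reporting": ["R2", "R4"],
}


def build_traceability(requirements, component_to_requirements):
    """Cross-reference every requirement to the components that fulfill it."""
    matrix = {req: [] for req in requirements}
    for component, reqs in component_to_requirements.items():
        for req in reqs:
            matrix.setdefault(req, []).append(component)
    return {req: sorted(components) for req, components in matrix.items()}


def unfulfilled(matrix):
    """Requirements that no component claims to fulfill."""
    return sorted(req for req, components in matrix.items() if not components)


def spread_out(matrix, threshold=2):
    """Requirements implemented by more than `threshold` components
    (the threshold is an assumed cut-off for 'several')."""
    return sorted(
        req for req, components in matrix.items() if len(components) > threshold
    )


matrix = build_traceability(requirements, component_to_requirements)
print("Not fulfilled:", unfulfilled(matrix))
print("Needs several components:", spread_out(matrix))
```

With this sample data, R3 is reported as unfulfilled and R2 as depending on three components, which are the two situations the matrix is meant to expose.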

Detailed descriptions of the various do’s and don’ts of inspections are available in most systems analysis and design textbooks, as well as in the original work by Ed Yourdon (reference here).

Testing, the third component of the V model, applies to the right-hand side of the V. The most elementary test of software is the unit test, performed by a programmer on a single unit of code such as a module or a program (a method or a class if you are using object-oriented programming). Later on, multiple units of software are combined and tested together in what is called an integration test. When the entire application has been integrated, you conduct a system test to make sure that the application works in the business setting of people and business processes using the software itself.

The purpose of a unit test is to check that the program has been coded in accordance with its design, i.e., that it meets its specifications (quality definition 1 – see Chapter 1). It is not the purpose of the unit test to check that the design is correct, only that the code implements it. When the unit test has been completed, the program is ready for verification and validation against the programming exit criteria (one of which is the successful completion of a unit test). Once it passes the exit criteria, the program is handed off to the next phase of integration.
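As a minimal sketch of such a unit test, the following uses Python's standard `unittest` module. The function `order_total` and its design specification are hypothetical, invented here to show a test that checks the code against its design and nothing more.

```python
import unittest


def order_total(quantity, unit_price, discount_rate=0.0):
    """Hypothetical unit under test.

    Assumed design specification:
    - total = quantity * unit_price * (1 - discount_rate), rounded to cents
    - quantity must not be negative
    - discount_rate must lie between 0 and 1
    """
    if quantity < 0:
        raise ValueError("quantity must not be negative")
    if not 0.0 <= discount_rate <= 1.0:
        raise ValueError("discount_rate must lie between 0 and 1")
    return round(quantity * unit_price * (1 - discount_rate), 2)


class OrderTotalTest(unittest.TestCase):
    """Each test checks one clause of the assumed specification."""

    def test_total_without_discount(self):
        self.assertEqual(order_total(3, 10.00), 30.00)

    def test_total_with_discount(self):
        self.assertEqual(order_total(2, 50.00, 0.10), 90.00)

    def test_negative_quantity_is_rejected(self):
        with self.assertRaises(ValueError):
            order_total(-1, 10.00)


# Run the tests explicitly so the outcome is available as an object.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(OrderTotalTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Note that none of these tests asks whether a discount expressed as a rate is the right design; that question belongs to verification and validation of the design, not to the unit test.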

The purpose of integration testing, the next level, is to check that a set of programs work together as intended by the application design. Again, we are testing an artifact (an application, a subsystem, a string of programs) against its specification; the purpose is not to test that the system meets its requirements. Nor is the purpose to repeat the unit tests: the programs that enter into the integration test have been successfully unit-tested and therefore are deemed to be a correct implementation of the program design. As with unit tests, the software is handed off to the next level of testing once the integration test is complete and the exit criteria for integration testing are met.
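The difference from a unit test can be illustrated with a small sketch. The two units and the assembly below are hypothetical; the point is that the test exercises only the hand-over of data between units that are assumed to have passed their own unit tests.

```python
def parse_order_line(line):
    """Unit 1 (hypothetical): parse 'sku, quantity, unit_price' into a dict."""
    sku, quantity, unit_price = line.split(",")
    return {
        "sku": sku.strip(),
        "quantity": int(quantity),
        "unit_price": float(unit_price),
    }


def line_total(item):
    """Unit 2 (hypothetical): compute the total for one parsed order line."""
    return round(item["quantity"] * item["unit_price"], 2)


def invoice_total(lines):
    """The assembly under test: feeds the output of unit 1 into unit 2."""
    return round(sum(line_total(parse_order_line(line)) for line in lines), 2)


def test_units_work_together():
    """Integration test: checks the interface between the units,
    not the internal logic of either one."""
    lines = ["A-100, 2, 10.00", "B-200, 3, 1.50"]
    assert invoice_total(lines) == 24.50


test_units_work_together()
```

A failure here would point to a mismatch between the units, for example if the parser produced a key name that the calculation did not expect, rather than to a defect inside a single unit.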

The purpose of system testing, the next level, is to check that the entire business information system fulfills the requirements resulting from the analysis phase. When system testing is complete and the exit criteria for system testing are met, the system is handed off to IS operations and to the system users.
