Perhaps you remember watching a movie or being at a show in which an animal—maybe a dog, a horse, or a dolphin—did some pretty amazing things. The trainer gave a command and the dolphin swam to the bottom of the pool, picked up a ring on its nose, jumped out of the water through a hoop in the air, dived again to the bottom of the pool, picked up another ring, and then took both of the rings to the trainer at the edge of the pool. The animal was trained to do the trick, and the principles of operant conditioning were used to train it. But these complex behaviors are a far cry from the simple stimulus-response relationships that we have considered thus far. How can reinforcement be used to create complex behaviors such as these?

One way to expand the use of operant learning is to modify the schedule on which the reinforcement is applied. To this point we have only discussed a continuous reinforcement schedule, in which the desired response is reinforced every time it occurs; whenever the dog rolls over, for instance, it gets a biscuit. Continuous reinforcement results in relatively fast learning but also rapid extinction of the desired behavior once the reinforcer disappears. The problem is that because the organism is used to receiving the reinforcement after every behavior, the responder may give up quickly when it doesn’t appear.

Most real-world reinforcers are not continuous; they occur on a partial (or intermittent) reinforcement schedule—a schedule in which the responses are sometimes reinforced, and sometimes not. In comparison to continuous reinforcement, partial reinforcement schedules lead to slower initial learning, but they also lead to greater resistance to extinction. Because the reinforcement does not appear after every behavior, it takes longer for the learner to determine that the reward is no longer coming, and thus extinction is slower. The four types of partial reinforcement schedules are summarized in Table 7.2.

| Reinforcement schedule | Explanation | Real-world example |
|---|---|---|
| Fixed-ratio | Behavior is reinforced after a specific number of responses | Factory workers who are paid according to the number of products they produce |
| Variable-ratio | Behavior is reinforced after an average, but unpredictable, number of responses | Payoffs from slot machines and other games of chance |
| Fixed-interval | Behavior is reinforced for the first response after a specific amount of time has passed | People who earn a monthly salary |
| Variable-interval | Behavior is reinforced for the first response after an average, but unpredictable, amount of time has passed | Person who checks voice mail for messages |

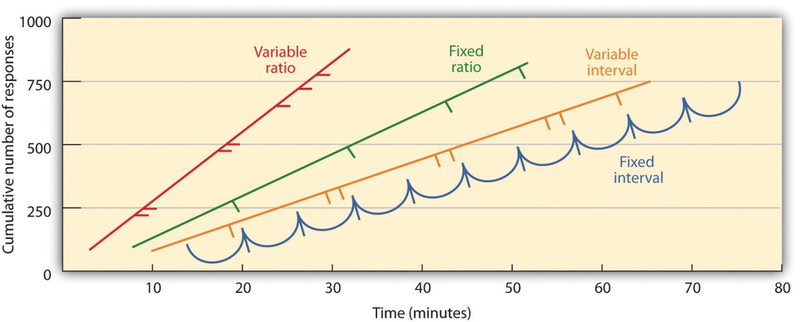

Partial reinforcement schedules are determined by whether the reinforcement is presented on the basis of the time that elapses between reinforcements (interval) or on the basis of the number of responses that the organism engages in (ratio), and by whether the reinforcement occurs on a regular (fixed) or unpredictable (variable) schedule. In a fixed-interval schedule, reinforcement occurs for the first response made after a specific amount of time has passed. For instance, on a one-minute fixed-interval schedule the animal receives a reinforcement every minute, assuming it engages in the behavior at least once during the minute. As you can see in Figure 7.3, animals under fixed-interval schedules tend to slow down their responding immediately after the reinforcement but then increase the behavior again as the time of the next reinforcement gets closer. (Most students study for exams the same way.) In a variable-interval schedule, the reinforcers appear on an interval schedule, but the timing is varied around the average interval, making the actual appearance of the reinforcer unpredictable. An example might be checking your e-mail: You are reinforced by receiving messages that come, on average, say every 30 minutes, but the reinforcement occurs only at random times. Interval reinforcement schedules tend to produce slow and steady rates of responding.

In a fixed-ratio schedule, a behavior is reinforced after a specific number of responses. For instance, a rat’s behavior may be reinforced after it has pressed a key 20 times, or a salesperson may receive a bonus after she has sold 10 products. As you can see in Figure 7.3, once the organism has learned to act in accordance with the fixed-ratio schedule, it will pause only briefly when reinforcement occurs before returning to a high level of responsiveness. A variable-ratio schedule provides reinforcers after an average, but unpredictable, number of responses. Winning money from slot machines or on a lottery ticket is an example of reinforcement that occurs on a variable-ratio schedule. For instance, a slot machine may be programmed to provide a win every 20 times the user pulls the handle, on average. As the "Slot Machine" figure illustrates, ratio schedules tend to produce high rates of responding because reinforcement increases as the number of responses increases.
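The four schedules described above can be written as simple decision rules, which may make the ratio/interval and fixed/variable distinctions easier to compare. The following is a minimal sketch, not a model of real animal behavior: the function names, the one-response-per-second responder, the choice to restart the interval timer at each reinforcement, and the particular random distributions used for the variable schedules are all assumptions made for illustration.

```python
import random


def fixed_ratio(n):
    """Reinforce every n-th response (e.g., every 20th key press)."""
    count = 0

    def respond(t):
        nonlocal count
        count += 1
        if count >= n:
            count = 0
            return True
        return False

    return respond


def variable_ratio(mean_n, rng):
    """Reinforce each response with probability 1/mean_n (assumed rule),
    so a reward arrives after mean_n responses on average."""
    def respond(t):
        return rng.random() < 1.0 / mean_n

    return respond


def fixed_interval(seconds):
    """Reinforce the first response made once `seconds` have elapsed
    since the last reinforcement (assumed timer rule)."""
    last = 0.0

    def respond(t):
        nonlocal last
        if t - last >= seconds:
            last = t
            return True
        return False

    return respond


def variable_interval(mean_seconds, rng):
    """Like fixed_interval, but each waiting time is drawn at random
    (exponential distribution, an assumption) around the mean."""
    last = 0.0
    wait = rng.expovariate(1.0 / mean_seconds)

    def respond(t):
        nonlocal last, wait
        if t - last >= wait:
            last = t
            wait = rng.expovariate(1.0 / mean_seconds)
            return True
        return False

    return respond


def count_rewards(respond, n_responses):
    """Hypothetical responder: one response per second at t = 1, 2, ..."""
    return sum(1 for t in range(1, n_responses + 1) if respond(float(t)))


if __name__ == "__main__":
    rng = random.Random(0)
    schedules = {
        "fixed-ratio (every 20 responses)": fixed_ratio(20),
        "variable-ratio (20 on average)": variable_ratio(20, rng),
        "fixed-interval (60 s)": fixed_interval(60.0),
        "variable-interval (60 s on average)": variable_interval(60.0, rng),
    }
    for name, respond in schedules.items():
        print(name, count_rewards(respond, 600))
```

With 600 responses at one per second, the fixed-ratio rule delivers exactly 30 reinforcers and the fixed-interval rule exactly 10; the two variable schedules deliver similar numbers on average but at unpredictable points. Note that under the ratio rules responding faster earns more reinforcers, whereas under the interval rules extra responses within an interval earn nothing.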

Complex behaviors are also created through shaping, the process of guiding an organism’s behavior to the desired outcome through the use of successive approximation to a final desired behavior. Skinner made extensive use of this procedure in his boxes. For instance, he could train a rat to press a bar two times to receive food, by first providing food when the animal moved near the bar. Then when that behavior had been learned he would begin to provide food only when the rat touched the bar. Further shaping limited the reinforcement to only when the rat pressed the bar, to when it pressed the bar and touched it a second time, and finally, to only when it pressed the bar twice. Although it can take a long time, in this way operant conditioning can create chains of behaviors that are reinforced only when they are completed.
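The logic of shaping, in which the criterion for reinforcement is raised one step at a time, can be sketched as follows. This is an illustrative sketch only: the `Shaper` class, the numeric "behavior level," and the rule that a stage counts as learned after a fixed number of consecutive reinforced responses are assumptions, since the text says only that the trainer moves on once a behavior "had been learned."

```python
# Successive approximations from the bar-pressing example, in order.
STAGES = [
    "moves near the bar",
    "touches the bar",
    "presses the bar",
    "presses the bar and touches it a second time",
    "presses the bar twice",
]


class Shaper:
    """Delivers food only when behavior meets the current criterion,
    then raises the criterion once that stage appears to be learned."""

    def __init__(self, stages, successes_to_advance=3):
        self.stages = stages
        self.needed = successes_to_advance  # assumed learning criterion
        self.stage = 0    # index of the current criterion
        self.streak = 0   # consecutive reinforced responses at this stage

    def observe(self, behavior_level):
        """behavior_level is the index in `stages` of the most advanced
        behavior the animal just showed. Returns True if food is given."""
        reinforced = behavior_level >= self.stage
        if reinforced:
            self.streak += 1
            at_final_stage = self.stage == len(self.stages) - 1
            if self.streak >= self.needed and not at_final_stage:
                self.stage += 1
                self.streak = 0
        else:
            self.streak = 0
        return reinforced


if __name__ == "__main__":
    shaper = Shaper(STAGES, successes_to_advance=2)
    for level in [0, 0, 0, 1, 1, 2]:
        fed = shaper.observe(level)
        print(STAGES[level], "->", "food" if fed else "no food")
```

In this run, moving near the bar is reinforced twice, after which it no longer earns food and the rat must touch the bar instead, mirroring the progression described above.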

Reinforcing animals if they correctly discriminate between similar stimuli allows scientists to test the animals’ ability to learn, and the discriminations that they can make are sometimes quite remarkable. Pigeons have been trained to distinguish between images of Charlie Brown and the other Peanuts characters (Cerella, 1980), and between different styles of music and art (Porter & Neuringer, 1984; Watanabe, Sakamoto, & Wakita, 1995).

Behaviors can also be trained through the use of secondary reinforcers. Whereas a primary reinforcer includes stimuli that are naturally preferred or enjoyed by the organism, such as food, water, and relief from pain, a secondary reinforcer (sometimes called a conditioned reinforcer) is a neutral event that has become associated with a primary reinforcer through classical conditioning. An example of a secondary reinforcer would be the whistle given by an animal trainer, which has been associated over time with the primary reinforcer, food. An example of an everyday secondary reinforcer is money. We enjoy having money, not so much for the stimulus itself, but rather for the primary reinforcers (the things that money can buy) with which it is associated.

KEY TAKEAWAYS

- Edward Thorndike developed the law of effect: the principle that responses that create a typically pleasant outcome in a particular situation are more likely to occur again in a similar situation, whereas responses that produce a typically unpleasant outcome are less likely to occur again in the situation.

- B. F. Skinner expanded on Thorndike’s ideas to develop a set of principles to explain operant conditioning.

- Positive reinforcement strengthens a response by presenting something that is typically pleasant after the response, whereas negative reinforcement strengthens a response by reducing or removing something that is typically unpleasant.

- Positive punishment weakens a response by presenting something typically unpleasant after the response, whereas negative punishment weakens a response by reducing or removing something that is typically pleasant.

- Reinforcement may be either partial or continuous. Partial reinforcement schedules are determined by whether the reinforcement is presented on the basis of the time that elapses between reinforcements (interval) or on the basis of the number of responses that the organism engages in (ratio), and by whether the reinforcement occurs on a regular (fixed) or unpredictable (variable) schedule.

- Complex behaviors may be created through shaping, the process of guiding an organism’s behavior to the desired outcome through the use of successive approximation to a final desired behavior.

EXERCISES AND CRITICAL THINKING

- Give an example from daily life of each of the following: positive reinforcement, negative reinforcement, positive punishment, negative punishment.

- Consider the reinforcement techniques that you might use to train a dog to catch and retrieve a Frisbee that you throw to it.

- Watch the following two videos from current television shows. Can you determine which learning procedures are being demonstrated?

- The Office: http://www.break.com/usercontent/2009/11/the-office-altoid-experiment-1499823

- The Big Bang Theory: http://www.youtube.com/watch?v=JA96Fba-WHk
