Focusing on numbers, all numbers can be represented by the positional notation system. The $b$-ary positional representation system uses the position of digits ranging from 0 to $b-1$ to denote a number. The quantity $b$ is known as the base of the number system. Mathematically, positional systems represent the positive integer $n$ as

$$n = \sum_{k=0}^{\infty} d_k b^k, \qquad d_k \in \{0, \dots, b-1\}$$

and we succinctly express $n$ in base $b$ as $n_b = d_N d_{N-1} \dots d_0$. The number 25 in base 10 equals $2 \times 10^1 + 5 \times 10^0$, so that the digits representing this number are $d_0 = 5$, $d_1 = 2$, and all other $d_k$ equal zero. This same number in binary (base 2) equals 11001 ($1 \times 2^4 + 1 \times 2^3 + 0 \times 2^2 + 0 \times 2^1 + 1 \times 2^0$) and 19 in hexadecimal (base 16). Fractions between zero and one are represented the same way, using negative powers of the base:

$$f = \sum_{k=-\infty}^{-1} d_k b^k$$
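The positional formula can be turned into a conversion routine by repeatedly dividing by the base: each remainder is the next digit $d_k$, starting from $d_0$. Below is a minimal Python sketch; the function names are hypothetical helpers, not a library API. Fractions are handled with `fractions.Fraction` so that repeated multiplication by $b$ stays exact, and each integer part that emerges is the next digit $d_{-1}, d_{-2}, \dots$.

```python
from fractions import Fraction

def integer_digits(n: int, b: int) -> list[int]:
    """Base-b digits of a non-negative integer n, most significant first."""
    if n == 0:
        return [0]
    digits = []
    while n > 0:
        n, d = divmod(n, b)      # remainder d is the next digit, starting at d_0
        digits.append(d)
    return digits[::-1]          # reverse so the list reads d_N ... d_0

def evaluate(digits: list[int], b: int) -> int:
    """Rebuild n = sum_k d_k b^k from digits listed most significant first."""
    n = 0
    for d in digits:
        n = n * b + d            # Horner's rule
    return n

def fraction_digits(f: Fraction, b: int, count: int) -> list[int]:
    """First `count` digits d_{-1}, d_{-2}, ... of a fraction 0 <= f < 1 in base b."""
    digits = []
    for _ in range(count):
        f = f * b
        d = f.numerator // f.denominator   # integer part is the next digit
        digits.append(d)
        f = f - d
    return digits

print(integer_digits(25, 10))                  # [2, 5]
print(integer_digits(25, 2))                   # [1, 1, 0, 0, 1]
print(integer_digits(25, 16))                  # [1, 9]
print(evaluate([1, 1, 0, 0, 1], 2))            # 25
print(fraction_digits(Fraction(5, 8), 2, 4))   # [1, 0, 1, 0]
```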

All numbers can be represented by their sign, integer and fractional parts. Complex numbers (Section 2.1) can be thought of as two real numbers that obey special rules to manipulate them. Humans use base 10, commonly assumed to be due to us having ten fingers. Digital computers use the base 2 or binary number representation, each digit of which is known as a bit (binary digit).

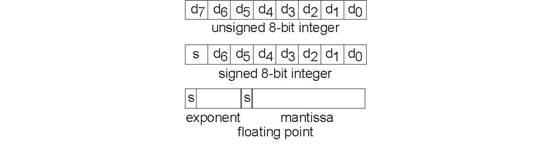

Here, each bit is represented as a voltage that is either "high" or "low," thereby representing "1" or "0," respectively. To represent signed values, we tack on a special bit, the sign bit, to express the sign. The computer's memory consists of an ordered sequence of bytes, each a collection of eight bits. A byte can therefore represent an unsigned number ranging from 0 to 255. If we take one of the bits and make it the sign bit, we can make the same byte represent numbers ranging from −128 to 127.

But a computer cannot represent all possible real numbers. The fault is not with the binary number system; rather, having only a finite number of bytes is the problem. While a gigabyte of memory may seem to be a lot, it takes an infinite number of bits to represent π. Since we want to store many numbers in a computer's memory, we are restricted to those that have a finite binary representation. Large integers can be represented by an ordered sequence of bytes. Common lengths, usually expressed in terms of the number of bits, are 16, 32, and 64. Thus, an unsigned 32-bit number can represent integers ranging from 0 to $2^{32} - 1$ (4,294,967,295), a number almost big enough to enumerate every human in the world!
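The byte and word ranges quoted above follow from counting bit patterns: $k$ bits give $2^k$ distinct patterns. The Python sketch below computes the ranges; the function names are hypothetical helpers. Note that the −128 to 127 range for a signed byte corresponds to two's-complement encoding, which most current hardware uses; a plain sign-magnitude encoding (one sign bit plus seven magnitude bits) spends one pattern on −0 and reaches only −127 to 127.

```python
import struct

def unsigned_range(bits: int) -> tuple[int, int]:
    """Smallest and largest unsigned integers representable with `bits` bits."""
    return 0, 2 ** bits - 1

def twos_complement_range(bits: int) -> tuple[int, int]:
    """Signed range when the top bit carries weight -2**(bits-1) (two's complement)."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1

def sign_magnitude_range(bits: int) -> tuple[int, int]:
    """Signed range with one pure sign bit and bits-1 magnitude bits (+0 and -0 both exist)."""
    largest = 2 ** (bits - 1) - 1
    return -largest, largest

print(unsigned_range(8))          # (0, 255)
print(twos_complement_range(8))   # (-128, 127)
print(sign_magnitude_range(8))    # (-127, 127)
print(unsigned_range(32))         # (0, 4294967295)

# The standard struct module enforces the same one-byte limits when packing.
print(struct.pack("B", 255), struct.pack("b", -128))   # b'\xff' b'\x80'
try:
    struct.pack("B", 256)         # 256 would need a ninth bit
except struct.error:
    print("256 does not fit in one unsigned byte")
```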

Exercise 5.2.1

For both 32-bit and 64-bit integer representations, what are the largest numbers that can be represented if a sign bit must also be included?

While this system represents integers well, how about numbers having nonzero digits to the right of the decimal point? In other words, how are numbers that have fractional parts represented? For such numbers, the binary representation system is used, but with a little more complexity. The floating-point system uses a number of bytes, typically 4 or 8, to represent the number, with one byte (sometimes two) reserved to represent the exponent $e$ of a power-of-two multiplier; the remaining bytes express the mantissa $m$, so that the number equals $m \times 2^{e}$.

The mantissa is usually taken to be a binary fraction having a magnitude in the range $\left[\tfrac{1}{2}, 1\right)$, which means that the binary representation is such that $d_{-1} = 1$. The number zero is an exception to this rule, and it is the only floating point number having a zero fraction. The sign of the mantissa represents the sign of the number, and the exponent can be a signed integer.
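Python's standard `math.frexp` happens to use the same normalization described here: it returns a mantissa with magnitude in $[1/2, 1)$ and an integer exponent, with zero as the exception. The sketch below uses it to split a few values. Treat it as a view of the value, not of the stored bits: an IEEE 754 double normalizes its significand to $[1, 2)$ and leaves the leading 1 implicit, so the layout in memory differs in detail.

```python
import math

# math.frexp(x) returns (m, e) with x == m * 2**e and 0.5 <= abs(m) < 1;
# zero is the exception and comes back as (0.0, 0).
for x in [2.5, -2.5, 25.0, 0.0]:
    m, e = math.frexp(x)
    print(f"{x} = {m} * 2**{e}")
    # math.ldexp computes m * 2**e, recovering x exactly
    assert math.ldexp(m, e) == x
```

The negative input shows the sign carried by the mantissa, while the exponent stays the same as for $+2.5$.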

A computer's representation of integers is either perfect or only approximate, the latter situation occurring when the integer exceeds the range of numbers that a limited set of bytes can represent. Floating point representations have similar representation problems: if the number $x$ can be multiplied or divided by enough powers of two to yield a fraction lying between 1/2 and 1 that has a finite binary-fraction representation, the number is represented exactly in floating point. Otherwise, we can only represent the number approximately, though not catastrophically in error as with integers. For example, the number 2.5 equals $0.625 \times 2^2$, the fractional part of which has an exact binary representation ($0.625 = 0.101_2$). However, the number 2.6 does not have an exact binary representation and can only be represented approximately in floating point. In single precision floating point numbers, which require 32 bits (one byte for the exponent and the remaining 24 bits for the mantissa), the number 2.6 will be represented as 2.5999999046.... Note that this approximation has a much longer decimal expansion. This level of accuracy may not suffice in numerical calculations. Double precision floating point numbers consume 8 bytes, and quadruple precision 16 bytes. The more bits used in the mantissa, the greater the accuracy. This increasing accuracy means that more numbers can be represented exactly, but there are always some that cannot. Such inexact numbers have an infinite binary representation.

Realizing that real numbers can only be represented approximately is quite important, and underlies the entire field of numerical analysis, which seeks to predict the numerical accuracy of any computation.
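The claims about 2.5 and 2.6 can be checked directly. A decimal value has a finite binary expansion exactly when its reduced denominator is a power of two; Python's `fractions.Fraction` gives the exact decimal value, and a round trip through `struct` format `"f"` rounds a number to IEEE 754 single precision. The helper names `to_single` and `has_finite_binary_expansion` are illustrative, and the sketch assumes Python's `float` is an IEEE 754 double, as it is on mainstream hardware.

```python
import struct
from fractions import Fraction

def to_single(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision value and return it as a float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def has_finite_binary_expansion(q: Fraction) -> bool:
    """True when the reduced denominator of q is a power of two."""
    d = q.denominator
    return (d & (d - 1)) == 0    # a power of two has exactly one bit set

for text in ["2.5", "2.6"]:
    exact = Fraction(text)       # exact decimal value, parsed without rounding
    print(text,
          "| finite binary expansion:", has_finite_binary_expansion(exact),
          "| single precision:", repr(to_single(float(text))),
          "| double is exact:", Fraction(float(text)) == exact)
```

For 2.5 every check reports an exact representation. For 2.6 the reduced denominator is 5, so no finite binary fraction exists, the single-precision value prints as `2.5999999046325684`, and even the double-precision value differs from 13/5.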

Exercise 5.2.2

What are the largest and smallest numbers that can be represented in 32-bit floating point? In 64-bit floating point that has sixteen bits allocated to the exponent? Note that both exponent and mantissa require a sign bit.

So long as the integers aren't too large, they can be represented exactly in a computer using the binary positional notation. Electronic circuits that make up the physical computer can add and subtract integers without error. (This statement isn't quite true; when does addition cause problems?)
