The Sampling Theorem says that if we sample a bandlimited signal $s(t)$ fast enough, it can be recovered without error from its samples $s(nT_s)$, $n \in \{\dots, -1, 0, 1, \dots\}$. Sampling is only the first phase of acquiring data into a computer: computational processing further requires that the samples be quantized, meaning that analog values are converted into digital (Section 1.2.2: Digital Signals) form. In short, we will have performed analog-to-digital (A/D) conversion.

A phenomenon reminiscent of the errors incurred in representing numbers on a computer prevents signal amplitudes from being converted with no error into a binary number representation. In

analog-to-digital conversion, the signal is assumed to lie within a predefined range. Assuming we can scale the signal without affecting the information it expresses, we'll define this range to

be [−1, 1]. Furthermore, the A/D converter assigns amplitude values in this range to a set of integers. A B-bit converter produces one of the integers {0, 1,..., 2B–

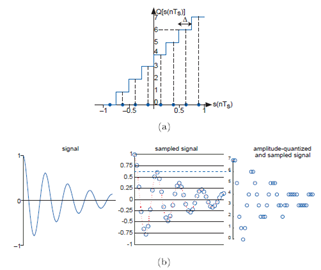

1}for each sampled input. Figure 5.5 shows how a three-bit A/D converter assigns input values to the integers. We define a quantization interval to be the

range of values assigned to the same integer. Thus, for our example three-bit A/D converter, the quantization interval Δ is 0.25; in general, it is  .

.
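The assignment of amplitudes to integers can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `adc` is hypothetical, each quantization interval is taken to be closed on the left and open on the right, and the single input value 1 is folded into the topmost interval. The figure may draw the interval boundaries differently.

```python
import math


def adc(x: float, bits: int) -> int:
    """Map an amplitude in [-1, 1] to an integer in {0, ..., 2**bits - 1}.

    Assumption: intervals are [-1 + k*delta, -1 + (k + 1)*delta), and the
    endpoint x = 1 is assigned to the top interval.
    """
    if not -1.0 <= x <= 1.0:
        raise ValueError("amplitude must lie in [-1, 1]")
    levels = 2 ** bits
    delta = 2.0 / levels  # quantization interval width
    k = math.floor((x + 1.0) / delta)  # index of the interval containing x
    return min(k, levels - 1)  # fold x = 1 into the top interval


if __name__ == "__main__":
    # Three-bit converter: delta = 0.25, integers 0 through 7.
    for amplitude in (-1.0, -0.3, 0.0, 0.6, 1.0):
        print(amplitude, "->", adc(amplitude, 3))
```

Every amplitude between 0.5 and 0.75 maps to the same integer here, which is exactly the loss of information that the rest of the section quantifies.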

Exercise 5.4.1

Recalling the plot of average daily highs in this frequency domain problem (Problem 4.5), why is this plot so jagged? Interpret this effect in terms of analog-to-digital conversion.

Because values lying anywhere within a quantization interval are assigned the same value for computer processing, the original amplitude value cannot be recovered without error. Typically, the D/A converter, the device that converts integers to amplitudes, assigns an amplitude equal to the value lying halfway in the quantization interval. The integer 6 would be assigned to the amplitude 0.625 in this scheme. The error introduced by converting a signal from analog to digital form by sampling and amplitude quantization, then back again, would be at most half the quantization interval for each amplitude value. Thus, the so-called A/D error, the largest such error, equals half the width of a quantization interval: $\frac{\Delta}{2} = \frac{1}{2^B}$. As we have fixed the input-amplitude range, the more bits available in the A/D converter, the smaller the quantization error.
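The midpoint rule and the half-interval error bound can be checked numerically. The sketch below is an assumption-laden illustration rather than a model of any particular converter: the names `adc`, `dac`, and `max_roundtrip_error` are hypothetical, and the interval convention (left-closed, with the input 1 folded into the top interval) is chosen for convenience.

```python
import math


def adc(x: float, bits: int) -> int:
    """Quantize an amplitude in [-1, 1] to an integer in {0, ..., 2**bits - 1}."""
    levels = 2 ** bits
    delta = 2.0 / levels
    return min(math.floor((x + 1.0) / delta), levels - 1)


def dac(k: int, bits: int) -> float:
    """Return the amplitude halfway through quantization interval k."""
    delta = 2.0 / 2 ** bits
    return -1.0 + (k + 0.5) * delta


def max_roundtrip_error(bits: int, points: int = 10_000) -> float:
    """Largest |x - dac(adc(x))| over an evenly spaced grid on [-1, 1]."""
    worst = 0.0
    for i in range(points + 1):
        x = -1.0 + 2.0 * i / points
        worst = max(worst, abs(x - dac(adc(x, bits), bits)))
    return worst


if __name__ == "__main__":
    print(dac(6, 3))  # midpoint of the interval assigned to integer 6
    for b in (3, 4, 8):
        # The worst-case error should not exceed delta / 2 = 2**-b.
        print(b, max_roundtrip_error(b), 2.0 ** -b)
```

Under these assumptions the worst-case error on the grid matches $2^{-B}$ and halves with each added bit.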

To analyze the amplitude quantization error more deeply, we need to compute the signal-to-noise ratio, which equals the ratio of the signal power and the quantization error power. Assuming the

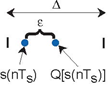

signal is a sinusoid, the signal power is the square of the rms amplitude: power The illustration (Figure 5.6) details a single quantization interval.

The illustration (Figure 5.6) details a single quantization interval.

Its width is Δ and the quantization error is denoted by E. To find the power in the quantization error, we note that no matter into which quantization interval the signal's value falls, the error will have the same characteristics. To calculate the rms value, we must square the error and average it over the interval.

Since the quantization interval width for a $B$-bit converter equals $\frac{2}{2^B} = 2^{-(B-1)}$, we find that the signal-to-noise ratio for the analog-to-digital conversion process equals

$$\mathrm{SNR} = \frac{1/2}{2^{-2(B-1)}/12} = \frac{3}{2}\,2^{2B} = 6B + 10\log_{10} 1.5 \ \text{dB}$$

Thus, every bit increase in the A/D converter yields a 6 dB increase in the signal-to-noise ratio. The constant term $10\log_{10} 1.5$ equals 1.76 dB.
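The formula can be compared against a direct measurement on a quantized sinusoid. The following is a minimal sketch, assuming a full-scale, unit-amplitude sinusoid sampled uniformly in phase over one period and a midpoint D/A rule; the function names are hypothetical. Because the error of a sinusoid is only approximately uniform within each interval (the signal lingers near its peaks), the measured value should land close to, but not exactly on, the predicted one.

```python
import math


def roundtrip(x: float, bits: int) -> float:
    """Quantize x in [-1, 1] and convert back using the interval midpoint."""
    levels = 2 ** bits
    delta = 2.0 / levels
    k = min(math.floor((x + 1.0) / delta), levels - 1)
    return -1.0 + (k + 0.5) * delta


def predicted_snr_db(bits: int) -> float:
    """SNR from the derivation: 10 log10((3/2) * 2**(2B))."""
    return 10.0 * math.log10(1.5 * 2.0 ** (2 * bits))


def measured_snr_db(bits: int, samples: int = 100_000) -> float:
    """Ratio of signal power to quantization error power, in dB."""
    signal_power = 0.0
    error_power = 0.0
    for i in range(samples):
        s = math.sin(2.0 * math.pi * (i + 0.5) / samples)
        e = s - roundtrip(s, bits)
        signal_power += s * s
        error_power += e * e
    return 10.0 * math.log10(signal_power / error_power)


if __name__ == "__main__":
    for b in (6, 8, 10):
        print(b, round(predicted_snr_db(b), 2), round(measured_snr_db(b), 2))
```

Comparing successive rows gives a direct check on the roughly 6 dB gained per added bit.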

Exercise 5.4.2

This derivation assumed the signal's amplitude lay in the range [−1, 1]. What would the amplitude quantization signal-to-noise ratio be if it lay in the range [−A, A]?

Exercise 5.4.3

How many bits would be required in the A/D converter to ensure that the maximum amplitude quantization error is at least 60 dB smaller than the signal's peak value?

Exercise 5.4.4

Music on a CD is stored to 16-bit accuracy. To what signal-to-noise ratio does this correspond?

Once we have acquired signals with an A/D converter, we can process them using digital hardware or software. It can be shown that if the computer processing is linear, the result of sampling, computer processing, and unsampling is equivalent to some analog linear system. Why go to all the bother if the same function can be accomplished using analog techniques? Knowing when digital processing excels and when it does not is an important issue.
